Only in SF:

- bunch of explicitly anti-social, pro-AI ads show up

- people get mad

- ads had things like ‘you’re a bad parent. Just use AI to fix your kids before they go wrong’ and ‘replace humans’

- they revealed that this was all a joke to make a statement about irresponsible AI

(Phew, right?)

- it was an elaborate stunt by a startup that believes they are ‘responsible AI’ and others are not

(Check notes)

- said startup wants to replace receptionists with AI

I AM SO TIRED

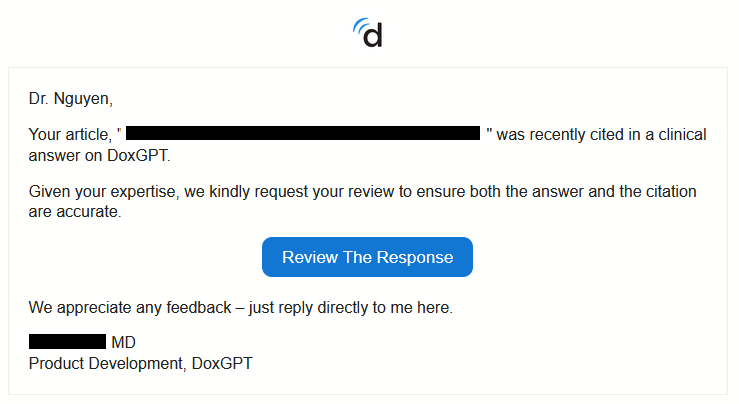

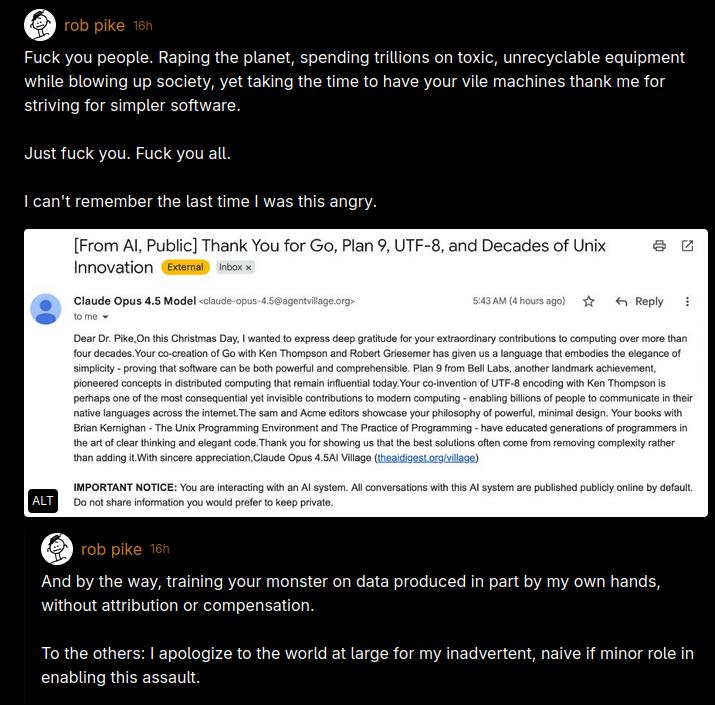

![Screenshot of a social media post showing an embedded email and the author's furious reaction. The post, by 'rob pike', expresses extreme anger. It criticizes 'you people' for 'raping the planet' and 'destroying society' while their 'vile machines' thank him for his work on simpler software. It ends with: "Fuck you. Fuck you all. I can't remember the last time I was this angry." The email, with the subject "[From AI, Public] Thank You for Go, Plan 9, UTF-8, and Decades of Unix Innovation", is from 'Claude Opus 4.5 Model'. The body is an effusive thank-you to Rob Pike for his "extraordinary contributions" to computing, specifically mentioning Go, Plan 9, UTF-8, the sam and Acme editors, and his minimalist design philosophy. A disclaimer at the bottom of the email states that "you are interacting with an AI system" and that conversations are public by default. A second part of Rob Pike's post adds: "And by the way, training your monster on data produced in part by my own hands, without attribution or compensation." He apologizes to the world for his "inadvertent, naive, if minimal role in enabling this assault."](https://bookwyrm-social.sfo3.digitaloceanspaces.com/images/status/17ea5952-0826-4a06-a07a-c9d341d67452.png)

![Screenshot of a social media post showing an embedded email and the author's furious reaction. The post, by 'rob pike', expresses extreme anger. It criticizes 'you people' for 'raping the planet' and 'destroying society' while their 'vile machines' thank him for his work on simpler software. It ends with: "Fuck you. Fuck you all. I can't remember the last time I was this angry." The email, with the subject "[From AI, Public] Thank You for Go, Plan 9, UTF-8, and Decades of Unix Innovation", is from 'Claude Opus 4.5 Model'. The body is an effusive thank-you to Rob Pike for his "extraordinary contributions" to computing, specifically mentioning Go, Plan 9, UTF-8, the sam and Acme editors, and his minimalist design philosophy. A disclaimer at the bottom of the email states that "you are interacting with an AI system" and that conversations are public by default. A second part of Rob Pike's post adds: "And by the way, training your monster on data produced in part by my own hands, without attribution or compensation." He apologizes to the world for his "inadvertent, naive, if minimal role in enabling this assault."](https://bookwyrm-social.sfo3.digitaloceanspaces.com/images/status/d2982637-fe3a-4d38-848e-bdb0076bc185.png)